Artificial Intelligence Ethics: Why Your Job’s Future Depends on Decisions Made Today

The intersection of innovation and responsibility has never been more critical than in today’s era of rapid technological advancement. As artificial intelligence systems become embedded in everything from healthcare diagnostics to customer service chatbots, the choices made by developers, policymakers, and business leaders will directly shape the stability and prosperity of American workers. The ethical principles governing these systems determine whether AI drives inclusive growth or deepens workforce inequalities.

Every day, AI-driven tools streamline routine tasks, but without robust safeguards, they can also automate roles at a scale and speed unseen in previous industrial revolutions. Workers in technology-intensive industries, especially those early in their careers, face stark uncertainty about job continuity, career progression, and fair evaluation. Embedding fairness, accountability, and transparency at every stage of system design and deployment is essential to ensure that technological progress benefits employees, companies, and communities alike.

Meanwhile, real-world examples of biased hiring algorithms, skewed risk assessments in finance, and inequitable resource allocation in healthcare highlight the stakes of failing to prioritize ethical AI governance. Landmark lawsuits and emerging regulations illustrate how systemic oversights can translate into legal liabilities, consumer mistrust, and workforce disruption. Proactive adoption of best practices—informed by interdisciplinary expertise—can help organizations avert these pitfalls and foster a culture of responsible innovation.

Looking ahead to 2030 and beyond, the balance between automation and human skill enrichment will define economic competitiveness and social cohesion. Investing in workforce reskilling, establishing clear accountability mechanisms, and promoting inclusive design processes will enable AI to augment rather than replace human potential. By centering human-centric values in technological development, leaders can ensure that ethical AI practices deliver sustainable benefits for today’s employees and future generations.

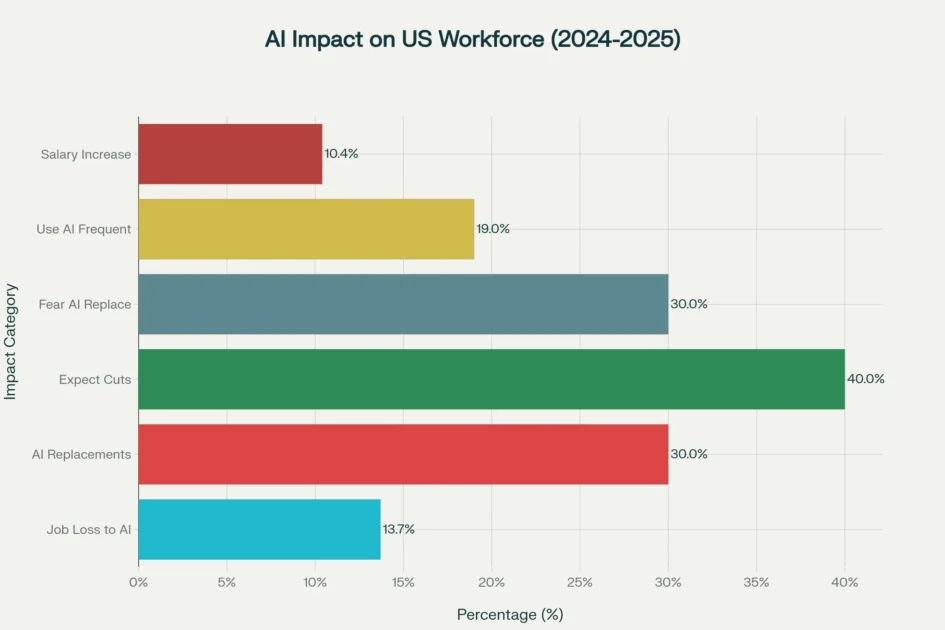

AI’s impact on US workforce showing job displacement fears vs actual usage and salary trends

Current Artificial Intelligence Ethics in America

Federal Governance and Regulatory Evolution

The United States has witnessed dramatic shifts in artificial intelligence ethics governance, particularly with the transition from the Biden to Trump administrations. The Biden administration’s Executive Order 14110, issued in October 2023, established comprehensive AI ethics requirements, mandating safety testing, bias audits, and transparency measures for AI systems used in critical sectors. This framework emphasized responsible development and required companies developing powerful AI systems to share safety test results with the government before public release.

However, the Trump administration’s Executive Order 14179, signed in January 2025, fundamentally altered this approach by revoking the previous administration’s AI safety requirements and reorienting federal policy toward innovation and competitiveness. The order explicitly directs agencies to eliminate policies that “unnecessarily hinder AI development or deployment,” signaling a shift from precautionary artificial intelligence ethics toward market-driven approaches.

This policy reversal materialized most visibly in the transformation of the AI Safety Institute into the Center for AI Standards and Innovation (CAISI) in June 2025. Commerce Secretary Howard Lutnick emphasized that “innovators will no longer be limited by these standards,” reflecting the administration’s belief that AI ethics regulations had become barriers to American technological leadership rather than essential safeguards.

Timeline of major US Artificial Intelligence ethics and governance milestones from 2020-2025

The Fragmented Regulatory Environment

Unlike the European Union’s comprehensive AI Act, the United States maintains a decentralized approach to AI ethics governance. Federal agencies apply existing laws to AI systems rather than creating AI-specific regulations, resulting in a patchwork of sector-specific guidance from agencies like the Federal Trade Commission, Equal Employment Opportunity Commission, and Department of Justice.

National Institute of Standards and Technology (NIST)

The National Institute of Standards and Technology (NIST) AI Risk Management Framework serves as the primary voluntary guidance for organizations seeking to implement artificial intelligence ethics principles. This framework emphasizes four core functions: Govern, Map, Measure, and Manage, providing structured approaches for identifying and mitigating AI risks throughout system lifecycles. However, adoption remains voluntary, and only 26% of companies have developed sufficient capabilities to move beyond AI proofs of concept toward meaningful implementation.

State-level initiatives have emerged to fill federal gaps, with California, New York, Illinois, and Colorado enacting their own AI ethics requirements. These laws typically focus on algorithmic auditing in employment, housing, and consumer applications, creating compliance complexities for multi-state operations.

Employment Displacement and the Artificial Intelligence Ethics Imperative

Quantifying the Current Impact

Recent data suggest that artificial intelligence ethics failures are already generating measurable employment consequences across American industries. According to analysis by Goldman Sachs Research, AI adoption could displace 6-7% of the US workforce, with some estimates ranging from 3% to 14% depending on implementation approaches. The Federal Reserve Bank of St. Louis found a 0.47 correlation between occupational AI exposure and unemployment rate increases from 2022 to 2025, with computer and mathematical occupations experiencing the steepest job losses, in line with their high AI exposure scores.
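To make the correlation figure concrete, the sketch below computes a Pearson correlation coefficient by hand. The exposure scores and unemployment changes are invented for illustration and are not the St. Louis Fed data; the function name `pearson_r` is likewise an assumption of this example. A coefficient like 0.47 describes how closely two series move together, not whether one causes the other.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of co-deviations and sums of squared deviations from each mean.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: AI exposure scores (0 to 1) and unemployment-rate
# changes (percentage points) for five made-up occupation groups.
ai_exposure = [0.10, 0.25, 0.40, 0.60, 0.80]
unemployment_change = [0.3, 0.1, 0.6, 0.4, 1.2]

r = pearson_r(ai_exposure, unemployment_change)
print(f"r = {r:.2f}")
```

Values near 1 or -1 indicate a tight linear relationship, and values near 0 indicate little linear relationship.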

The employment impact varies dramatically by sector and worker demographics. Younger tech workers have been disproportionately affected, with unemployment among 20- to 30-year-olds in tech-exposed occupations rising by nearly 3 percentage points since early 2025. This pattern suggests that AI ethics considerations around workforce transition support have been inadequate, particularly for early-career professionals who lack the experience to pivot quickly to AI-complementary roles.

Correlation between Artificial Intelligence exposure and unemployment rate increases across US occupations (2022-2025)

Manufacturing workers have experienced sustained displacement, with 1.7 million jobs lost to automation since 2000, while recent AI implementations have accelerated this trend. However, sectors implementing stronger artificial intelligence ethics frameworks have demonstrated more successful human-AI collaboration models, with productivity gains of 15% when AI is fully integrated with appropriate workforce development programs.

The Bias Problem: When Artificial Intelligence Ethics Fails

The most immediate employment consequences of inadequate artificial intelligence ethics emerge through algorithmic bias in hiring, promotion, and workplace evaluation systems. University of Washington research demonstrated that AI resume screening tools preferred white-associated names in 85% of cases compared to only 9% for Black-associated names, while also showing significant gender bias favoring male candidates. These findings have translated into real-world discrimination with substantial legal consequences.

The landmark Mobley v. Workday case represents the first major class action lawsuit challenging AI hiring systems under federal anti-discrimination laws. Derek Mobley, a Black man over 40 with reported anxiety and depression, applied to over 100 positions through Workday’s AI screening platform and was rejected for nearly every one without an interview. The Northern District of California certified the case as a collective action in May 2025, signaling that AI vendors can face liability for discriminatory outcomes even when they don’t directly employ job candidates.

AI Bias Litigation Cases

| Case Name | Year | AI System Type | Discrimination Type | Legal Theory | Outcome / Status | Key Impact |
| --- | --- | --- | --- | --- | --- | --- |
| Mobley v. Workday | 2023-2025 (Ongoing) | HR Screening Platform | Age, Race, Disability | Disparate Impact (ADEA, ADA, Title VII) | Class Action Certified | First major AI hiring class action |
| iTutor Group Settlement | 2022-2023 (Settled) | Automated Hiring Filter | Age, Gender | Age/Sex Discrimination | $365,000 Settlement | Automated rejection based on age |
| Huskey v. State Farm | 2023 (Ongoing) | Insurance Fraud Detection | Race (Housing) | Fair Housing Act Violation | Motion to Dismiss Denied | AI bias in insurance claims |
| ACLU v. HireVue/Intuit | 2025 | Video Interview Analysis | Disability, Race | ADA, Title VII, State Law | EEOC Complaint Filed | Accessibility in AI interviews |
| Open Communities v. Harbor Group | 2023 (Settled) | Rental Application Screening | Housing Choice Vouchers | Fair Housing Act | Settled – Compliance Required | Housing discrimination via AI |

Similar cases across industries demonstrate the pervasive nature of AI bias when artificial intelligence ethics protections are insufficient. State Farm faces litigation alleging that its AI fraud detection algorithms create disparate impacts on Black policyholders through biased risk assessment models. HireVue and Intuit confront EEOC complaints claiming their AI interview systems discriminate against deaf applicants and speakers of non-standard English dialects. These cases collectively illustrate how AI ethics failures create legal liability extending far beyond technical performance metrics.

The Transformation of Work: Beyond Job Loss to Job Evolution

Human-AI Collaboration as an Ethical Framework

The future of work increasingly depends on implementing artificial intelligence ethics principles that prioritize human-AI collaboration over simple replacement models. Research by PwC’s 2025 Global AI Jobs Barometer reveals that industries most exposed to AI demonstrate three times higher growth in revenue per employee, suggesting that appropriate AI ethics frameworks can create value rather than merely reduce costs. Workers with AI collaboration skills command 56% wage premiums, up from 25% in the previous year, indicating strong market demand for human-AI partnership capabilities.

The World Economic Forum projects that work tasks will be distributed as 47% primarily human, 22% mainly technological, and 30% collaborative by 2030. This distribution requires artificial intelligence ethics frameworks that optimize human strengths in creativity, emotional intelligence, and complex problem-solving while enabling AI to handle data processing, pattern recognition, and routine analysis. Companies successfully implementing such frameworks report 44% better performance on employee retention and revenue growth metrics compared to organizations pursuing skills-based rather than operational AI integration.

Emerging roles like AI trainers, prompt engineers, human-AI interface designers, and AI ethics specialists represent career categories that barely existed before 2023. These positions typically offer salaries ranging from $95,000 to $225,000 annually, demonstrating that AI ethics-driven job creation can provide economic advancement opportunities for workers who develop appropriate skills.

Reskilling and the Artificial Intelligence Ethics of Workforce Development

The rapid pace of AI-driven change places ethical obligations on employers and policymakers to provide workforce transition support. LinkedIn data indicates that skills requirements are changing 66% faster in AI-exposed occupations compared to traditional roles, accelerating from 25% in the previous year. This acceleration rate suggests that AI ethics frameworks must include proactive reskilling components rather than reactive displacement responses.

Leading organizations have implemented comprehensive reskilling programs aligned with AI ethics principles. AT&T’s Future Ready initiative represents a billion-dollar, multi-year investment in personalized skills development, recognizing that hiring new talent alone cannot address the scale of workforce transformation required. Amazon’s Upskilling 2025 program provides AI training to hundreds of thousands of employees while offering public education through its AI Ready courses. JPMorgan Chase now requires AI training for all new hires and has established university partnerships to build AI literacy across financial services.

These corporate initiatives demonstrate that artificial intelligence ethics extends beyond algorithmic fairness to include organizational responsibility for workforce adaptation. Companies implementing robust reskilling programs report higher employee retention rates and stronger innovation outcomes, suggesting that ethical workforce development creates competitive advantages rather than mere cost centers.

Projected AI impact on US job market by 2030 – risks, transformations, and opportunities

Regulatory Frameworks and Their Employment Implications

The NIST Artificial Intelligence Ethics Framework in Practice

The NIST AI Risk Management Framework provides the most comprehensive voluntary guidance for implementing artificial intelligence ethics in organizational settings. The framework’s four core functions—Govern, Map, Measure, and Manage—create structured approaches for identifying employment-related AI risks and implementing appropriate safeguards. Organizations following NIST guidance report more successful AI implementations with fewer bias-related incidents and stronger employee trust levels.

Case studies demonstrate the framework’s practical value for employment protection. A mid-sized commercial cleaning company implemented NIST AI RMF protocols for their customer service AI system, establishing governance committees, bias monitoring systems, and human oversight requirements. The implementation required six weeks and approximately $15,000 in consulting costs but resulted in measurable improvements in system fairness and employee confidence in AI-augmented workflows.

However, NIST framework adoption remains limited, with many organizations citing resource constraints and competing priorities. Smaller companies particularly struggle with the framework’s comprehensive requirements, creating AI ethics gaps that disproportionately affect workers in mid-sized and smaller enterprises. This disparity suggests that voluntary approaches may be insufficient to ensure consistent artificial intelligence ethics protection across the full spectrum of American workplaces.

Legal Evolution and Disparate Impact Theory

The legal landscape surrounding artificial intelligence ethics in employment continues evolving through court decisions and regulatory guidance. The joint statement from the FTC, EEOC, Consumer Financial Protection Bureau, and Department of Justice clarifying that existing anti-discrimination laws apply to AI systems has created enforceable AI ethics requirements even without specific AI legislation.

Disparate impact theory has emerged as the primary legal framework for challenging AI employment discrimination. This doctrine allows workers to demonstrate discrimination through statistical evidence of differential outcomes across protected groups without proving intentional bias. The survival of disparate impact claims through the early stages of cases like Mobley v. Workday signals that artificial intelligence ethics violations can generate substantial legal liability for both AI developers and implementing organizations.
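One common way to screen for "differential outcomes" is the four-fifths rule of thumb from the EEOC's Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is below 80% of the highest group's rate is generally treated as showing evidence of adverse impact. The sketch below applies that check to invented numbers. The group labels, counts, and function names are assumptions for illustration, and the check is a screening heuristic rather than a legal determination.

```python
def selection_rate(selected, applicants):
    """Fraction of applicants in a group who were selected."""
    if applicants <= 0:
        raise ValueError("applicants must be positive")
    return selected / applicants


def adverse_impact_ratios(outcomes):
    """Map each group to its selection rate divided by the highest group's rate.

    `outcomes` maps a group label to a (selected, applicants) pair.
    """
    rates = {group: selection_rate(s, n) for group, (s, n) in outcomes.items()}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any selections")
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical screening results: (candidates advanced, candidates screened).
screening_outcomes = {
    "group_a": (48, 120),  # 40% advanced
    "group_b": (18, 90),   # 20% advanced
}

FOUR_FIFTHS = 0.8  # threshold from the four-fifths rule of thumb

ratios = adverse_impact_ratios(screening_outcomes)
flagged = sorted(group for group, ratio in ratios.items() if ratio < FOUR_FIFTHS)

for group, ratio in ratios.items():
    print(f"{group}: impact ratio {ratio:.2f}")
print("below four-fifths threshold:", flagged)
```

In practice an audit would also examine sample sizes and statistical significance, since small groups can produce misleading ratios.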

However, the Trump administration’s directive to federal agencies to eliminate disparate impact enforcement creates uncertainty about government-led AI ethics protection. While private litigation remains unaffected, reduced federal enforcement may shift responsibility to state agencies and individual legal challenges, potentially creating inconsistent protection levels across different jurisdictions.

Industry-Specific Artificial Intelligence Ethics Challenges

Healthcare: Life-and-Death Ethics Decisions

Healthcare represents one of the most critical sectors for artificial intelligence ethics implementation, where algorithmic bias can directly impact patient outcomes and healthcare worker employment security. A widely-used healthcare risk prediction algorithm affecting over 200 million Americans demonstrated systematic bias favoring white patients over Black patients by using healthcare spending as a proxy for medical need. This approach failed to account for socioeconomic factors that reduce healthcare access for minority populations, creating AI ethics failures with life-threatening consequences.
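The proxy problem described above can be illustrated with a toy cohort. In the sketch below, all patients, groups, and dollar amounts are invented, and the `Patient` type and `top_k` helper are assumptions of this example rather than any real system. Patients in group "B" are given lower spending than group "A" patients with the same level of need, standing in for reduced access to care.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    patient_id: str
    group: str
    need: int      # hypothetical count of chronic conditions
    spending: int  # hypothetical annual healthcare spending in dollars


def top_k(patients, key, k):
    """Return the k patients ranked highest by the given key."""
    return sorted(patients, key=key, reverse=True)[:k]


# Hypothetical cohort: at similar need, group B spends less than group A.
cohort = [
    Patient("a1", "A", need=5, spending=10_000),
    Patient("a2", "A", need=3, spending=6_000),
    Patient("a3", "A", need=2, spending=4_000),
    Patient("b1", "B", need=5, spending=5_500),
    Patient("b2", "B", need=4, spending=4_500),
    Patient("b3", "B", need=2, spending=2_000),
]

# Enroll three patients in an extra-care program under each ranking.
by_spending = top_k(cohort, key=lambda p: p.spending, k=3)
by_need = top_k(cohort, key=lambda p: p.need, k=3)

print("ranked by spending:", [p.patient_id for p in by_spending])
print("ranked by need:    ", [p.patient_id for p in by_need])
```

Ranking by spending enrolls one group B patient, while ranking by need enrolls two, even though the ranking code never looks at the group field.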

Healthcare AI implementations also reshape medical professional roles in ways that require careful artificial intelligence ethics consideration. AI diagnostic systems can process medical imaging faster than radiologists but lack the contextual understanding and patient interaction skills that define quality healthcare. Successful implementations focus on augmenting rather than replacing healthcare professionals, but this requires AI ethics frameworks that prioritize human oversight and maintain meaningful professional roles.

The integration of AI in healthcare hiring and workforce management creates additional artificial intelligence ethics complexities. Automated resume screening for medical positions must account for diverse educational backgrounds and non-traditional career paths while maintaining quality standards. Healthcare organizations implementing AI workforce tools report improved efficiency but require robust bias auditing to ensure equitable opportunities for healthcare professionals from underrepresented backgrounds.

Financial Services: Artificial Intelligence Ethics and Economic Access

Financial services face unique artificial intelligence ethics challenges due to the sector’s central role in economic opportunity and wealth building. AI systems used for credit decisions, insurance underwriting, and investment advice directly determine Americans’ access to economic mobility tools, making AI ethics implementation essential for maintaining fair economic systems.

The correlation between AI exposure and employment disruption appears particularly pronounced in financial services, with 32% of finance workers reporting frequent AI use and unemployment rising in AI-exposed financial roles. However, financial institutions successfully implementing artificial intelligence ethics frameworks report stronger customer trust and regulatory compliance while maintaining competitive advantages through improved risk assessment and customer service capabilities.

State Farm’s ongoing litigation over allegedly biased AI fraud detection illustrates how AI ethics failures in financial services can create both legal liability and systemic inequities. The case alleges that AI algorithms using biometric, behavioral, and housing data as proxies for race subjected Black policyholders to additional scrutiny and delays, demonstrating how seemingly neutral technical criteria can perpetuate discriminatory outcomes without appropriate artificial intelligence ethics safeguards.

Technology Sector: Leading by Example or Cautionary Tale

The technology sector faces particular scrutiny regarding artificial intelligence ethics implementation given its role in developing AI systems used across other industries. Major technology companies have implemented various AI ethics initiatives, but results remain mixed regarding employment protection and bias prevention.

Microsoft has established AI ethics principles emphasizing transparency, third-party testing, and bias mitigation, while reporting improved accuracy across diverse demographic groups in facial recognition systems. Amazon imposed a moratorium on law enforcement use of its Rekognition facial recognition technology and has invested heavily in bias testing and validation processes. These initiatives demonstrate corporate recognition that artificial intelligence ethics implementation affects both social responsibility and business sustainability.

However, the technology sector has also experienced significant AI-related job displacement, with 77,999 tech job losses directly linked to AI from January through June 2025. This displacement pattern suggests that even organizations leading AI ethics development may struggle to implement these principles in their own workforce management practices, highlighting the complexity of translating ethical frameworks into operational employment policies.

Future Workforce Implications and Strategic Recommendations

Preparing for AI-Driven Career Evolution

The evidence strongly indicates that successful career navigation in an AI-driven economy requires understanding and actively engaging with artificial intelligence ethics principles. Workers who develop skills in AI collaboration, ethical AI implementation, and human-AI interface design position themselves for roles that complement rather than compete with AI capabilities. These emerging career paths offer substantial economic opportunities, with AI collaboration specialists earning median salaries exceeding $160,000 annually.

Professional development strategies should emphasize skills that remain distinctly human while incorporating AI literacy. Creative problem-solving, emotional intelligence, ethical reasoning, and strategic thinking represent capabilities that AI cannot easily replicate and that become more valuable as AI handles routine analytical tasks. Workers developing these competencies alongside technical AI skills create career resilience that transcends specific technological implementations.

Educational institutions and training programs must integrate artificial intelligence ethics education throughout curriculum design rather than treating it as a separate subject. Understanding bias detection, algorithmic fairness, and responsible AI development becomes essential professional competency across fields from healthcare to finance to education. Early adoption of these skills provides competitive advantages as organizations seek employees capable of implementing AI systems responsibly.

Organizational Artificial Intelligence Ethics Implementation

Organizations seeking to navigate AI adoption while protecting workforce interests must implement comprehensive artificial intelligence ethics frameworks from the outset of AI initiatives. The NIST AI Risk Management Framework provides structured approaches, but successful implementation requires sustained organizational commitment and cross-functional collaboration between technical, legal, and human resources teams.

Proactive bias auditing and algorithmic impact assessment should become standard practices for any AI system affecting employment decisions, customer interactions, or service delivery. Organizations implementing regular bias testing report fewer discrimination incidents and stronger employee and customer trust levels. These auditing processes also provide legal protection by demonstrating good-faith efforts to prevent discriminatory outcomes.

Human oversight requirements and meaningful human involvement in AI-driven decisions represent essential artificial intelligence ethics safeguards. Successful implementations maintain human decision-making authority while using AI to enhance rather than replace human judgment. This approach requires clear protocols for when human intervention is required and training for staff members who oversee AI-augmented processes.
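A protocol for when human intervention is required can be written down as an explicit routing rule. The sketch below is one possible policy, not a standard: the `Recommendation` fields, the `route` function, and the 0.9 confidence threshold are all assumptions chosen for illustration. Under this policy the system never issues an adverse outcome on its own, and low-confidence recommendations also go to a person.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_ADVANCE = "auto_advance"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Recommendation:
    candidate_id: str
    action: str        # "advance" or "reject" (hypothetical labels)
    confidence: float  # model score in [0, 1]


def route(rec, min_confidence=0.9):
    """Decide whether a recommendation may proceed without a human.

    Assumed policy: any adverse recommendation and any low-confidence
    recommendation is sent to a human reviewer.
    """
    if rec.action != "advance":
        return Route.HUMAN_REVIEW
    if rec.confidence < min_confidence:
        return Route.HUMAN_REVIEW
    return Route.AUTO_ADVANCE


# Hypothetical recommendations from a screening tool.
queue = [
    Recommendation("c1", "advance", 0.95),
    Recommendation("c2", "advance", 0.62),
    Recommendation("c3", "reject", 0.99),
]

for rec in queue:
    print(rec.candidate_id, route(rec).value)
```

Encoding the rule this way makes the oversight policy auditable: reviewers can see which cases the system is allowed to handle alone and can change the threshold in one place.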

Policy and Regulatory Recommendations

The current fragmented approach to artificial intelligence ethics regulation creates compliance complexities and inconsistent worker protection levels across different sectors and jurisdictions. Federal legislation establishing baseline artificial intelligence ethics requirements would provide clarity while allowing sector-specific implementation flexibility. Such legislation should emphasize transparency, bias auditing, and workforce transition support rather than prescriptive technical requirements that could stifle innovation.

State and local governments should coordinate artificial intelligence ethics initiatives to prevent regulatory arbitrage while allowing policy experimentation. The emergence of differing state requirements creates compliance burdens for multi-jurisdictional organizations but also enables testing different approaches to artificial intelligence ethics implementation. Successful state initiatives should inform federal policy development rather than creating permanent regulatory fragmentation.

Investment in workforce development and reskilling programs represents essential policy infrastructure for managing AI-driven economic transition. Public-private partnerships similar to South Carolina’s readySC and Apprenticeship Carolina programs provide models for scaled workforce development that addresses both individual worker needs and regional economic development objectives. These programs should integrate artificial intelligence ethics education to ensure that workforce development creates AI-literate professionals capable of implementing responsible AI practices.

Conclusion

As organizations fold artificial intelligence ethics frameworks into their decision-making, the choices we make now will chart the course for workforce inclusivity and stability over the next decade. By aligning AI development with principles of fairness, accountability, and transparency, companies can mitigate legal risks, maintain employee trust, and foster a more resilient labor market. Effective governance transforms AI from a disruptive force into a catalyst for sustainable growth and innovation.

Yet the data make clear that without vigilant oversight, automation may widen existing economic disparities, underscoring the need for a human-centered approach grounded in artificial intelligence ethics. Proven reskilling programs, bias audits, and inclusive design processes serve as ethical guardrails, ensuring that technological progress does not outpace our capacity to support displaced workers and underrepresented groups. Embedding these practices at every stage of AI lifecycle management is both a moral imperative and a strategic advantage.

At the policy level, coordinated federal and state initiatives must solidify baseline requirements for algorithmic fairness, workforce transition support, and meaningful human oversight, the principles at the heart of responsible artificial intelligence ethics. Legislative clarity reduces compliance complexity for multi-state employers while fostering innovation through consistent, predictable standards. Public-private partnerships that integrate ethics education into workforce development will prepare American workers to excel alongside AI systems rather than be sidelined by them.

Ultimately, the future of work in the United States hinges on our collective commitment to ethical AI deployment. Organizations that embrace artificial intelligence ethics today will unlock new opportunities for collaboration, drive equitable economic outcomes, and build enduring trust with stakeholders. The decisions made now will resonate for years to come, determining whether AI serves as a tool for human empowerment or a source of division, and it is our responsibility to ensure it is the former.

Citations

- https://www.nu.edu/blog/ai-job-statistics/

- https://www.goldmansachs.com/insights/articles/how-will-ai-affect-the-global-workforce

- https://www.diligent.com/resources/blog/ai-regulations-in-the-us

- https://iapp.org/resources/article/us-federal-ai-governance/

- https://www.anecdotes.ai/learn/ai-regulations-in-2025-us-eu-uk-japan-china-and-more

- https://www.capacitymedia.com/article/trump-overhauls-biden-ai-safety-institute-to-create-new-innovation-centre

- https://www.nextgov.com/artificial-intelligence/2025/06/commerce-rebrands-its-ai-safety-institute/405803/

- https://www.whitecase.com/insight-our-thinking/ai-watch-global-regulatory-tracker-united-states

- https://www.nist.gov/itl/ai-risk-management-framework

- https://www.netsolutions.com/insights/nist-ai-rmf-case-study/

- https://www.ncsl.org/technology-and-communication/artificial-intelligence-2025-legislation

- https://www.stlouisfed.org/on-the-economy/2025/aug/is-ai-contributing-unemployment-evidence-occupational-variation

- https://explodingtopics.com/blog/ai-replacing-jobs

- https://www.quinnemanuel.com/the-firm/publications/when-machines-discriminate-the-rise-of-ai-bias-lawsuits/

- https://sanfordheisler.com/blog/2024/09/was-i-discriminated-against-by-ai-part-iii-what-does-a-lawsuit-for-employment-discrimination-look-like/

- https://www.fisherphillips.com/en/news-insights/discrimination-lawsuit-over-workdays-ai-hiring-tools-can-proceed-as-class-action-6-things.html

- https://www.pwc.com/gx/en/issues/artificial-intelligence/job-barometer/2025/report.pdf

- https://blog.theinterviewguys.com/the-rise-of-human-ai-collaboration/

- https://www.ibm.com/think/insights/ai-and-the-future-of-work

- https://www.weforum.org/stories/2025/04/linkedin-strategic-upskilling-ai-workplace-changes/

- https://eightfold.ai/blog/reskilling-and-upskilling/

- https://camoinassociates.com/resources/how-ai-is-impacting-the-us-workforce/

- https://www.ispartnersllc.com/it-assurance/nist-ai-rmf/

- https://www.lumenova.ai/blog/pros-and-cons-of-implementing-the-nist-ai-risk-management-framework/

- https://www.brookings.edu/articles/the-legal-doctrine-that-will-be-key-to-preventing-ai-discrimination/

- https://research.aimultiple.com/ai-bias/

- https://www.paubox.com/blog/real-world-examples-of-healthcare-ai-bias

- https://www.nature.com/articles/s41599-023-02079-x

- https://www.gallup.com/workplace/691643/work-nearly-doubled-two-years.aspx

- https://learn.g2.com/ethics-of-facial-recognition

- https://www.salesforce.com/agentforce/human-ai-collaboration/

- https://talentsprint.com/blog/ethical-ai-2025-explained

- https://kanerika.com/blogs/ai-ethical-concerns/

- https://en.wikipedia.org/wiki/Ethics_of_artificial_intelligence

- https://www.unesco.org/en/artificial-intelligence/recommendation-ethics

- https://www.intelligence.gov/ai/ai-ethics-framework

- https://www.unesco.org/en/forum-ethics-ai

- https://www.jpmorgan.com/insights/global-research/artificial-intelligence/ai-impact-job-growth

- https://www.ibm.com/think/insights/ai-ethics-and-governance-in-2025

- https://www.reuters.com/world/us/americans-fear-ai-permanently-displacing-workers-reutersipsos-poll-finds-2025-08-19/

- https://www.congress.gov/crs-product/R48555

- https://www.unibo.it/en/study/course-units-transferable-skills-moocs/course-unit-catalogue/course-unit/2024/446601

- https://www.brookings.edu/articles/new-data-show-no-ai-jobs-apocalypse-for-now/

- https://ojs.victoria.ac.nz/wfeess/article/download/7664/6811/10787

- https://www.proskauer.com/blog/job-applicants-algorithmic-bias-discrimination-lawsuit-survives-motion-to-dismiss

- https://www.washington.edu/news/2024/10/31/ai-bias-resume-screening-race-gender/

- https://pmc.ncbi.nlm.nih.gov/articles/PMC8320316/

- https://rfkhumanrights.org/our-voices/bias-in-code-algorithm-discrimination-in-financial-systems/

- https://research.google/pubs/saving-face-investigating-the-ethical-concerns-of-facial-recognition-auditing/

- https://www.scu.edu/ethics/focus-areas/technology-ethics/resources/examining-the-ethics-of-facial-recognition/

- https://www.ohchr.org/en/stories/2024/07/racism-and-ai-bias-past-leads-bias-future

- https://www.unesco.org/en/artificial-intelligence/recommendation-ethics/cases

- https://esdst.eu/the-ethics-of-facial-recognition-a-necessary-debate-in-the-age-of-surveillance/

- https://publications.lawschool.cornell.edu/jlpp/2024/11/21/ai-hr-algorithmic-discrimination-in-the-workplace/

- https://www.ispp.org.in/ethics-of-ai-in-public-policy-in-the-indian-context/

- https://www.urmconsulting.com/information-security/artificial-intelligence/nist-ai-rmf

- https://www.trust.org/toolkit/ai-governance-for-africa-part-1/7-ai-governance-in-united-states/

- https://www.forbes.com/sites/thomasbrewster/2025/06/03/the-wiretap-trump-says-goodbye-to-the-ai-safety-institute/

- https://www.splunk.com/en_us/blog/learn/ai-governance.html

- https://deadline.com/2025/06/ai-safety-institute-trump-howard-lutnick-1236424299/

- https://www.whitehouse.gov/wp-content/uploads/2025/07/Americas-AI-Action-Plan.pdf

- https://www.practical-devsecops.com/best-ai-security-frameworks-for-enterprises/

- https://fedscoop.com/trump-administration-rebrands-ai-safety-institute-aisi-caisi/

- https://2021-2025.state.gov/artificial-intelligence/

- https://www.axios.com/pro/tech-policy/2025/05/29/ai-safety-institute-renaming

- https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf

- https://smythos.com/managers/hr/human-ai-collaboration-and-job-displacement/

- https://www.workday.com/en-us/perspectives/artificial-intelligence/2025/08/25-ways-ai-will-change-the-future-of-work.html

- https://www.ibm.com/think/insights/ai-upskilling

- https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/superagency-in-the-workplace-empowering-people-to-unlock-ais-full-potential-at-work

- https://www.onrec.com/news/opinion/how-ai-is-transforming-workforce-reskilling-in-2025

- https://www.sciencedirect.com/science/article/pii/S0166497223000585

- https://www.nexford.edu/insights/how-will-ai-affect-jobs

- https://professional.dce.harvard.edu/blog/how-to-keep-up-with-ai-through-reskilling/

- https://blog.workday.com/en-us/2025-ai-trends-outlook-the-rise-of-human-ai-collaboration.html

- https://hbr.org/2023/09/reskilling-in-the-age-of-ai