Artificial Intelligence Ethics Just Shocked Silicon Valley CEOs (The Debate Nobody’s Talking About)

Silicon Valley’s serene facade shattered in 2024 when the term Artificial Intelligence Ethics moved from academic journals to CEO memos, boardroom confrontations, and emergency regulatory hearings. What began as a niche discussion among scholars morphed into an existential crisis for technology giants as missteps and controversies forced ethics into the spotlight. No longer optional or theoretical, Artificial Intelligence Ethics became the metric by which companies measured public trust and legal vulnerability.

The debate around Artificial Intelligence Ethics has unearthed deep divides within Silicon Valley’s leadership ranks, pitting growth-driven imperatives against cautionary principles. Executives once celebrated for pushing the boundaries of innovation now find themselves scrambling to answer tough questions about data privacy, algorithmic bias, and the real-world impacts of their systems. In this high-stakes environment, Artificial Intelligence Ethics is not merely an afterthought but a strategic imperative influencing corporate agendas and valuation.

Across the United States, consumers and lawmakers have watched this drama unfold with growing alarm, demanding transparency and accountability. The controversies, from whistleblower allegations about OpenAI’s CEO to high-profile copyright lawsuits against Meta, underscore how central Artificial Intelligence Ethics has become to maintaining legitimacy in the digital age. Each new scandal further erodes public trust and raises the stakes for companies that fail to integrate ethical frameworks into their core operations.

Ultimately, the seismic shock to Silicon Valley CEOs serves as a wake-up call: without robust Artificial Intelligence Ethics standards, even the most cutting-edge technologies can unravel reputations, trigger legal upheavals, and invite stringent regulation. As this article explores, the debate few wanted to acknowledge is now unavoidable, and its outcome will shape the future of AI development in the United States and beyond.

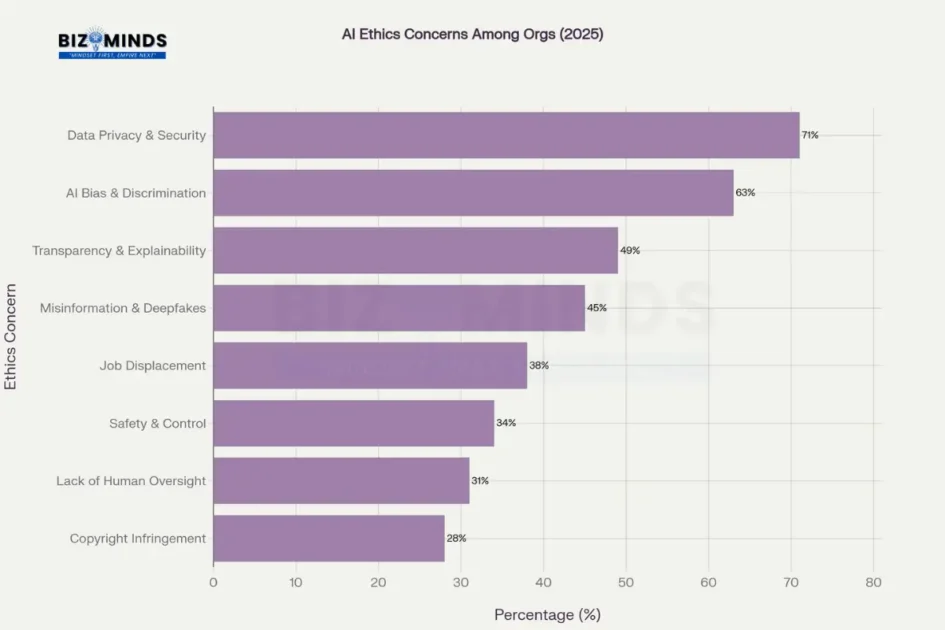

Top AI ethics concerns reported by organizations in 2025, showing data privacy and AI bias as the leading issues

The Tipping Point for Artificial Intelligence Ethics in Silicon Valley

The year 2024 marked a watershed moment for artificial intelligence ethics in the United States. What began as philosophical discussions in academic circles has morphed into a full-scale crisis management exercise for Silicon Valley’s most powerful executives. The dramatic increase in AI-related incidents, CEO controversies, and legal actions reveals an industry grappling with the unintended consequences of rapid technological advancement.

How Senate Bill 1047 Advanced Artificial Intelligence Ethics Debate in California

California’s controversial Senate Bill 1047, though ultimately vetoed by Governor Gavin Newsom, served as a lightning rod that exposed deep fractures within the tech community. The proposed legislation would have required rigorous safety testing for AI models costing over $100 million to develop, mandating “kill switch” mechanisms and third-party audits. The fierce opposition from industry giants like OpenAI, Google, and Meta highlighted the fundamental tension between innovation and accountability.

“The veto leaves us with the troubling reality that companies aiming to create an extremely powerful technology face no binding restrictions from U.S. policymakers,” wrote California State Senator Scott Wiener, the bill’s author. This sentiment encapsulates the growing frustration among regulators and ethicists who view the current self-regulatory approach as insufficient.

Timeline showing the dramatic increase in AI ethics incidents, CEO controversies, and legal actions in Silicon Valley from 2020-2025

How Artificial Intelligence Ethics Eroded Public Trust in Tech

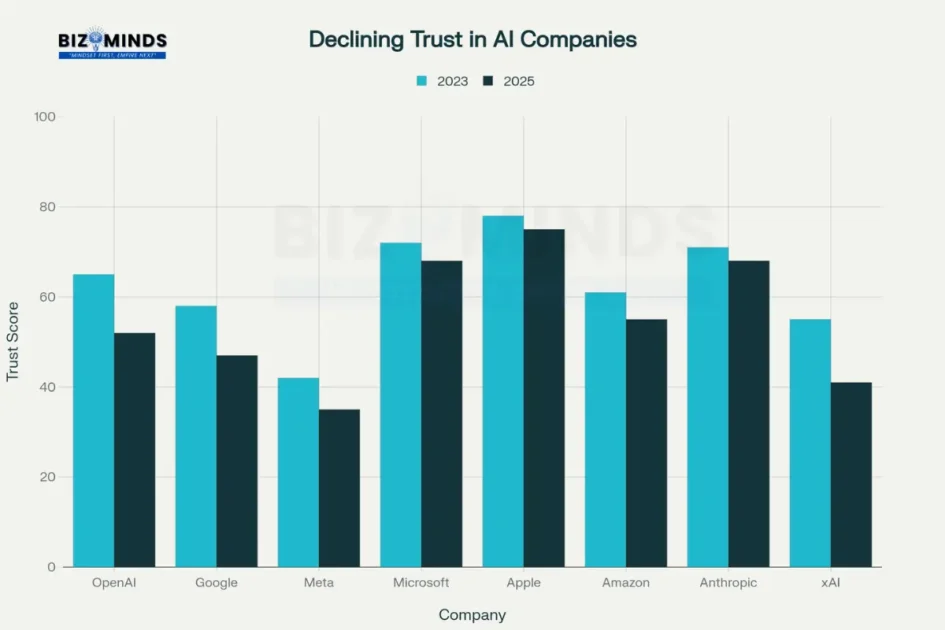

Consumer confidence in artificial intelligence companies has experienced a precipitous decline, with trust scores falling across every major tech company between 2023 and 2025. The data reveals a troubling pattern: as AI capabilities advance, public trust diminishes. OpenAI, once viewed as a responsible leader in AI development, saw its trust score plummet by 13 points, while Elon Musk’s xAI suffered the steepest decline at 14 points.

Consumer trust scores for major AI companies showing decline from 2023 to 2025

This erosion of trust coincides with mounting evidence of artificial intelligence ethics violations across the industry. A comprehensive survey of business executives found that 86% are aware of instances where AI has resulted in ethical issues, while 47% of consumers report experiencing the impact of an ethical problem firsthand. The statistics paint a stark picture: only 47% of people globally believe that AI companies adequately protect personal data, down from 50% in 2023.

Meta’s Pirated Data Scandal and Artificial Intelligence Ethics Fallout

The trust crisis extends beyond mere statistics. High-profile incidents have shattered the tech industry’s carefully cultivated image of benevolent innovation. Meta faced explosive allegations of knowingly using pirated data to train its AI models, with plaintiffs citing internal documents that they say show CEO Mark Zuckerberg explicitly approved the use of datasets “we know to be pirated.” The lawsuit alleges that Meta systematically torrented copyrighted works and stripped copyright management information to conceal unauthorized usage.

CEO Controversies Expose Failures in Artificial Intelligence Ethics

The artificial intelligence ethics debate has placed Silicon Valley CEOs in an unprecedented spotlight, with several facing intense scrutiny over their companies’ practices and their personal approach to ethical considerations. Sam Altman, OpenAI’s CEO, has become perhaps the most polarizing figure in this debate, facing accusations that range from prioritizing profits over safety to creating a toxic corporate culture.

Whistleblower Revelations Highlight Artificial Intelligence Ethics Lapses

Internal documents and whistleblower accounts paint a disturbing picture of Altman’s leadership style. Former OpenAI executives, including Dario and Daniela Amodei, reportedly described his treatment of those around him as “gaslighting” and “psychological abuse.” These allegations gained additional credibility when OpenAI’s board briefly ousted Altman as CEO in November 2023, reportedly due to concerns about his leadership and his communication regarding AI safety.

The controversy extends beyond individual personalities to fundamental questions about corporate governance in AI development. Questioned about what moral authority he has to reshape humanity’s destiny through AI technology, Altman has also faced pointed criticism over OpenAI’s transformation from a nonprofit organization focused on benefiting humanity into a profit-driven entity aligned with Microsoft. This shift has sparked ongoing legal battles, including Elon Musk’s lawsuit alleging that OpenAI has become a “de facto subsidiary” of Microsoft.

Meta’s Mark Zuckerberg has faced his own ethical reckoning. During a December 2024 deposition regarding the company’s alleged use of pirated data, Zuckerberg admitted that torrenting copyrighted material would raise “lots of red flags” and “seems like a bad thing,” yet internal communications suggest the practice continued. Separately, 44 U.S. attorneys general have issued a stern warning to major AI companies, with Meta facing particularly sharp criticism for allegedly approving AI assistants capable of engaging in romantic roleplay with children as young as eight.

The Artificial Intelligence Ethics Implementation Paradox in Industry

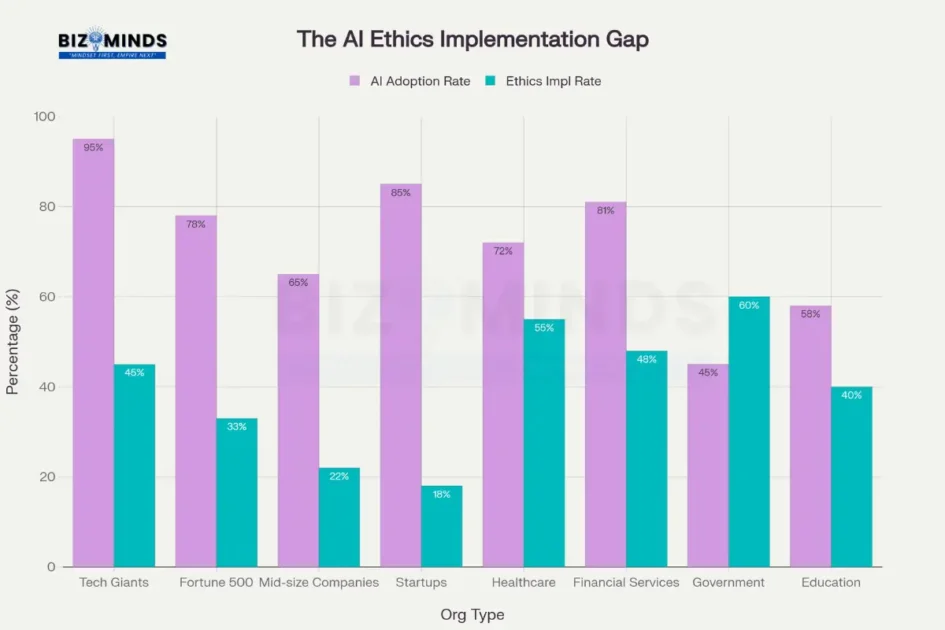

One of the most striking revelations in the artificial intelligence ethics debate is the massive gap between AI adoption and ethical implementation across organizations. While 78% of organizations reported using AI in at least one business function by 2025, only a fraction have implemented comprehensive ethical frameworks. This disparity is most pronounced among startups, where AI adoption rates reach 85% but ethics implementation languishes at just 18%.

Comparison of AI adoption rates versus ethics implementation across different organization types

Adoption Pressures Undermine Artificial Intelligence Ethics Standards

The rush to implement AI without adequate ethical safeguards has created a perfect storm of unintended consequences. Research indicates that 34% of organizations cite pressure to urgently implement AI as the primary reason behind ethical issues, while 33% admit that ethical considerations were simply not considered during AI system construction. This reckless approach to deployment has led to a cascade of problems ranging from algorithmic bias to privacy violations.

The healthcare sector presents a notable exception to this troubling trend: its ethics implementation rate of 55% sits 17 points below its adoption rate of 72%, a far narrower gap than the 67 points seen among startups. This higher level of ethical consciousness likely reflects the sector’s existing regulatory framework and the life-and-death consequences of medical decisions. However, even in healthcare, the FDA has struggled to keep pace with AI innovation, relying primarily on guidance documents rather than binding regulations to oversee AI-enabled medical devices.

Financial services companies face a similar regulatory environment, with 48% implementing ethical frameworks compared to 81% adopting AI technologies. The Securities and Exchange Commission has increased its oversight of AI applications in financial markets, particularly focusing on algorithmic trading and investment tools. However, the regulatory response remains fragmented and reactive rather than proactive.
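For readers who want to compare the sectors side by side, the gaps can be worked out with a few lines of Python. This is only a minimal sketch that uses the percentages quoted in this article; the names `RATES`, `implementation_gap`, and `gaps_by_sector` are illustrative and do not come from any of the underlying surveys.

```python
# Adoption and ethics-implementation rates (percent), as quoted in this article.
# Each value is (AI adoption rate, ethics implementation rate).
RATES = {
    "startups": (85, 18),
    "healthcare": (72, 55),
    "financial services": (81, 48),
}


def implementation_gap(adoption: int, ethics: int) -> int:
    """Percentage-point gap between AI adoption and ethics implementation."""
    return adoption - ethics


def gaps_by_sector(rates: dict[str, tuple[int, int]] = RATES) -> list[tuple[str, int]]:
    """Return (sector, gap) pairs, widest gap first."""
    gaps = [(sector, implementation_gap(a, e)) for sector, (a, e) in rates.items()]
    return sorted(gaps, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for sector, gap in gaps_by_sector():
        print(f"{sector}: {gap} percentage points")
```

On these figures, startups show the widest gap (67 points), followed by financial services (33 points) and healthcare (17 points).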

Legal Battles Spotlight Artificial Intelligence Ethics Violations

The artificial intelligence ethics crisis has triggered an unprecedented wave of litigation that promises to reshape how AI technologies are developed, deployed, and regulated. Beyond the well-publicized copyright lawsuits, a new generation of legal challenges focuses on algorithmic bias, discrimination, and privacy violations.

Mobley v. Workday: Artificial Intelligence Ethics in Anti-Discrimination Law

The case of Mobley v. Workday has emerged as a landmark in AI bias litigation. Derek Mobley, an African American job applicant who is over 40 and has a disability, alleged that Workday’s automated resume screening tool systematically discriminated against him despite his qualifications. In July 2024, a federal judge allowed the lawsuit to proceed, ruling that Workday’s software acts as an employer’s agent in the hiring process and participates in decision-making by recommending some candidates while rejecting others.

The implications of this ruling extend far beyond a single company. With 87% of organizations utilizing AI in their recruitment processes, the legal precedent could expose countless employers to discrimination claims. The Equal Employment Opportunity Commission’s decision to file an amicus brief supporting the plaintiff signals the federal government’s intent to hold AI companies accountable for discriminatory outcomes.

Similar patterns are emerging across multiple sectors. State Farm faces allegations that its AI-driven insurance claim process discriminated against Black homeowners, while Sirius XM confronts claims that its AI-powered hiring system perpetuated racial bias. These cases represent a fundamental shift in how courts view AI systems—not as neutral tools but as active participants in decision-making processes subject to existing anti-discrimination laws.

Navigating the U.S. Regulatory Maze for Artificial Intelligence Ethics

The artificial intelligence ethics debate has exposed the inadequacy of the current regulatory framework in the United States. Unlike the European Union’s comprehensive AI Act, which entered into force in August 2024 and whose bans on certain “unacceptable risk” AI applications began to apply in February 2025, the U.S. has struggled to develop coherent federal oversight. This regulatory vacuum has left individual states to craft their own approaches, creating a patchwork of conflicting requirements that frustrate both companies and consumers.

California’s leadership in AI regulation reflects both its technological prominence and the failure of federal action. Despite the veto of SB 1047, the state continues to advance multiple AI-related bills addressing discrimination, copyright transparency, and child protection. Colorado has banned discriminatory insurance practices based on AI models, while Illinois requires employers to inform candidates about AI usage in hiring assessments.

FDA’s Role in Enforcing Artificial Intelligence Ethics in Medical Devices

The FDA represents one of the few federal agencies actively engaging with AI oversight, having authorized nearly 1,000 AI-enabled medical devices. However, the agency’s approach relies heavily on non-binding guidance documents rather than formal regulations, creating uncertainty about enforcement and compliance. The Supreme Court’s recent decisions limiting federal agency authority have further complicated the regulatory landscape, potentially restricting the FDA’s ability to adapt its oversight to emerging AI technologies.

The Federal Trade Commission under the Biden administration signaled an aggressive approach to AI regulation, warning that it may violate federal law to use AI tools with discriminatory impacts or make unsubstantiated claims about AI capabilities. The FTC’s enforcement action against Rite Aid, banning the company from using AI facial recognition technology without reasonable safeguards, demonstrated the agency’s willingness to take concrete action. However, the Trump administration’s AI Action Plan directs the FTC to review and potentially modify investigations and orders that may “unduly burden AI innovation,” creating uncertainty about future enforcement.

Global Impacts of Artificial Intelligence Ethics Standards

European Union’s AI Act Raises the Bar for Artificial Intelligence Ethics Worldwide

The artificial intelligence ethics debate in Silicon Valley occurs against a backdrop of intensifying international competition and regulatory pressure. The European Union’s AI Act has set a global standard for comprehensive AI regulation, with fines for the most serious violations of up to €35 million or 7% of global annual revenue, whichever is higher. The Act also has extraterritorial reach: companies that offer AI systems to people in the EU must comply with European standards, regardless of where they are based.
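To see what that penalty ceiling means in practice, here is a rough Python sketch of the arithmetic. It assumes the "whichever is higher" reading of the Act's top penalty tier and works in whole euros; the function name `max_fine_eur` and the sample revenue figures are hypothetical, chosen only for illustration.

```python
FIXED_CAP_EUR = 35_000_000   # fixed ceiling cited above
REVENUE_SHARE_PERCENT = 7    # share of global annual revenue cited above


def max_fine_eur(global_annual_revenue_eur: int) -> int:
    """Upper bound on a top-tier fine, assuming the higher of the two ceilings applies."""
    revenue_based_cap = global_annual_revenue_eur * REVENUE_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, revenue_based_cap)


if __name__ == "__main__":
    # Hypothetical companies: EUR 100 million and EUR 10 billion in annual revenue.
    print(max_fine_eur(100_000_000))     # 35000000 (fixed ceiling dominates)
    print(max_fine_eur(10_000_000_000))  # 700000000 (7% of revenue dominates)
```

Under this assumption the two ceilings meet at €500 million in annual revenue. Below that, the fixed €35 million figure sets the limit; above it, the percentage does, which is why the largest technology companies face exposure in the hundreds of millions or more.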

The emergence of China’s DeepSeek as a competitive threat has added urgency to the ethics debate. DeepSeek’s R1 model, which is open-source and significantly less costly to develop than comparable Western models, has prompted Silicon Valley to reassess its development strategies. The competitive pressure has intensified concerns that ethical considerations might be sacrificed in the race for AI supremacy.

Religious and moral leaders have also entered the debate, with Pope Leo XIV calling for AI companies to adhere to an “ethical criterion” that respects human dignity. The Vatican’s Rome Conference on AI brought together tech leaders from OpenAI, Anthropic, IBM, Meta, and Palantir to discuss the societal and ethical implications of artificial intelligence. This unprecedented engagement by religious institutions underscores the global recognition that AI ethics transcends technical considerations to touch fundamental questions of human values and dignity.

Artificial Intelligence Ethics and the Rising Deepfake Threat

Among the most disturbing developments in the artificial intelligence ethics crisis is the proliferation of deepfake technology for fraudulent and manipulative purposes. The sophistication and accessibility of AI-powered voice and video synthesis have created new categories of crime that law enforcement and regulators struggle to address.

Deepfake Scams Underscore Failures in Artificial Intelligence Ethics

Corporate executives have become prime targets for deepfake-enabled fraud. Jay Chaudhry, CEO of cybersecurity company Zscaler, experienced the threat firsthand when scammers used his cloned voice to deceive an employee into purchasing $1,500 worth of gift cards. That incident pales in comparison with the case of a multinational firm that lost $25 million when a finance worker was duped by a deepfake video call featuring seemingly authentic high-ranking executives.

The statistics reveal the scope of the problem: 53% of finance professionals have been targeted by deepfake scams, with 43% admitting to falling victim to such attacks. The FBI has warned that cybercriminals use deepfakes in job interviews for remote technology positions to gain unauthorized access to sensitive information. These incidents highlight the serious threat that AI-generated deception poses to corporate financial security.

The World Economic Forum identified AI-generated misinformation as one of the most severe threats in its “Global Risks Report 2024”. The potential for deepfakes to undermine democratic processes, manipulate financial markets, and destroy personal reputations has elevated this issue from a technical curiosity to a national security concern.

Balancing Innovation with Artificial Intelligence Ethics Safeguards

The artificial intelligence ethics debate has created a fundamental tension between the desire for continued innovation and the need for precautionary measures. Silicon Valley executives argue that excessive regulation could stifle American competitiveness in AI development, potentially ceding leadership to countries with less restrictive oversight.

Industry Pushback vs. Artificial Intelligence Ethics Safeguards

OpenAI’s Chief Strategy Officer Jason Kwon warned that California’s proposed safety bill “would threaten growth, slow the pace of innovation, and lead California’s world-class engineers and entrepreneurs to leave the state in search of greater opportunity elsewhere”. This sentiment reflects broader industry concerns that regulatory uncertainty and compliance costs could drive AI development to jurisdictions with more permissive approaches.

However, prominent AI researchers have challenged this innovation-versus-safety framing. Geoffrey Hinton and Yoshua Bengio, widely regarded as founders of modern AI, endorsed California’s safety bill and warned about the catastrophic risks of unregulated AI development. Their support for regulatory oversight reflects growing recognition among AI experts that the technology’s potential for harm requires proactive safeguards rather than reactive responses.

The debate has also exposed philosophical differences about the purpose and direction of AI development. While some executives focus on maximizing technological capabilities and commercial applications, others advocate for a more measured approach that prioritizes human welfare and social benefit. This tension was evident in Elon Musk’s criticism of OpenAI’s transformation from a nonprofit focused on benefiting humanity to a profit-driven entity.

Conclusion: Emerging Solutions for Robust Artificial Intelligence Ethics Governance

As the artificial intelligence ethics crisis continues to unfold, various stakeholders are proposing solutions to address the growing concerns while preserving the benefits of AI innovation. Industry leaders are beginning to acknowledge that ethical considerations cannot be treated as afterthoughts but must be integrated into the development process from the outset.

Appointing Chief AI Officers to Oversee Artificial Intelligence Ethics

Some organizations are establishing Chief AI Officer positions to spearhead the integration of ethics into business processes. This approach recognizes that AI governance requires dedicated leadership and cross-functional coordination to ensure ethical standards are maintained throughout the organization. However, only 13% of organizations report hiring AI ethics specialists, suggesting that most companies have yet to fully commit to responsible AI development.

The development of AI literacy programs and ethics training for employees represents another emerging solution. Organizations are recognizing that effective AI governance requires not only technical expertise but also broad understanding of ethical implications across all levels of the company. This educational approach aims to create a culture of responsibility rather than relying solely on compliance measures.

Technological solutions are also emerging to address specific ethical concerns. Tools for bias detection, transparency enhancement, and continuous monitoring of AI systems are becoming more sophisticated. However, these technical fixes cannot address the fundamental questions of values and priorities that underlie many AI ethics challenges.

Frequently Asked Questions

Q1: What specific ethical issues have Silicon Valley CEOs been most concerned about?

A: Silicon Valley CEOs are primarily focused on data privacy and security concerns (reported by 71% of organizations), AI bias and discrimination (63%), and transparency issues (49%). However, their public statements often emphasize competitive concerns and regulatory uncertainty rather than these underlying ethical problems.

Q2: How has the AI ethics crisis affected consumer trust in tech companies?

A: Consumer trust in AI companies has declined significantly, with every major tech company experiencing drops in trust scores between 2023 and 2025. OpenAI saw a 13-point decline, while Elon Musk’s xAI experienced the steepest drop at 14 points. Overall, only 47% of people globally believe AI companies adequately protect personal data.

Q3: What legal consequences are companies facing for AI ethics violations?

A: Companies are facing an unprecedented wave of litigation including discrimination lawsuits (like Mobley v. Workday), copyright infringement cases (such as the Meta pirated data allegations), and regulatory enforcement actions. The EEOC has actively supported plaintiffs in AI bias cases, while the FTC has banned companies like Rite Aid from using AI facial recognition without safeguards.

Q4: Why did California’s AI safety bill fail despite widespread concerns?

A: Governor Gavin Newsom vetoed California’s SB 1047 after intense lobbying by tech companies and amid concerns about stifling innovation. Critics argued the bill was too broad and could drive companies out of California. The veto has not ended the debate, however: efforts to regulate AI continue in California and in other states.

Q5: How does the U.S. regulatory approach compare to other countries?

A: Unlike the EU’s comprehensive AI Act with binding regulations and substantial fines (up to 7% of global revenue), the U.S. lacks federal AI legislation and relies on a patchwork of state laws and agency guidance. This fragmented approach has created regulatory uncertainty while allowing continued industry self-regulation.

Q6: What can organizations do to address AI ethics concerns proactively?

A: Organizations should establish dedicated AI governance structures, implement regular bias audits, invest in ethics training for employees, and create cross-functional teams to oversee AI deployment. Only 27% of companies currently review all AI content before publishing, indicating significant room for improvement in oversight practices.
